Newsletter #18

Hang around for some info on how to keep your job.

A word from the person behind the laptop

We’re back!

It’s been a while since the last newsletter, and not much has happened since… right? Except for the U.S. casually threatening to invade its own allies, AI models quietly reaching PhD-level intelligence, and the occasional asteroid hurtling toward Earth.

Anyway, work has been hectic, so this issue took a little longer than planned. But I finally had time to sit down and sort through my thoughts on the latest AI developments in the legal sector. The big buzzword right now is agents, so that’s what we’ll look into.

Hang tight!

Agents, agents, agents!

Let’s start with a quick intro to one of the best agent tools on the market right now. Replit has released a coding agent, and for the past several months I’ve been burning through all my credits building demos of everything from a live stock-market opportunity tracker to a Pokédex for Aussie animals.

This video shows what it’s all about:

But what are agents, actually? While we’re still figuring out the real business case for traditional AI tools, a new trend has already taken over - one that moves beyond simple prompt-response interactions.

So let’s first make sure we’re on the same page:

• ChatGPT interface (and its siblings Claude, Gemini, etc.): Reactive AI. You give it a prompt, it responds. End of story.

• Agents: Autonomous AI. They set goals, execute tasks without constant human input, and adapt based on feedback. Less “answering questions,” more “figuring things out and acting on them.”
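For the technically curious, here is a toy Python sketch of that difference. It is an illustration under loud assumptions, not how Replit or any other vendor actually builds agents: `reactive_answer`, `ToyAgent` and the task names are hypothetical, and a real agent would call a language model where this code uses hard-coded rules (here, any "review" task fails once so the loop has feedback to adapt to).

```python
from __future__ import annotations

from dataclasses import dataclass, field


def reactive_answer(prompt: str) -> str:
    """Reactive AI: one prompt in, one response out. End of story.
    (Hypothetical stand-in for a chat model call.)"""
    return f"Response to: {prompt}"


@dataclass
class ToyAgent:
    """Autonomous AI as a loop: pick the next step toward a goal, act, adapt."""
    goal: str  # kept for context; a real agent would plan against it with a model
    pending: list[str] = field(default_factory=list)
    log: list[str] = field(default_factory=list)

    def plan_next_step(self) -> str | None:
        # Hypothetical planner: simply take the next queued task.
        return self.pending.pop(0) if self.pending else None

    def act(self, task: str) -> bool:
        # Toy rule so there is feedback to react to: a "review" task fails on
        # its first attempt and succeeds when retried.
        succeeded = "review" not in task or task.endswith("(retry)")
        self.log.append(f"{task}: {'done' if succeeded else 'failed'}")
        return succeeded

    def run(self, max_steps: int = 10) -> list[str]:
        for _ in range(max_steps):
            task = self.plan_next_step()
            if task is None:
                break  # nothing left to do
            if not self.act(task):
                # Adapt based on feedback: queue a retry instead of giving up.
                self.pending.append(f"{task} (retry)")
        return self.log


if __name__ == "__main__":
    print(reactive_answer("Draft an NDA"))
    agent = ToyAgent(
        goal="close the vendor deal",
        pending=["draft NDA", "review vendor agreement", "track signing deadline"],
    )
    for line in agent.run():
        print(line)
```

The point is the loop: the reactive version stops after one answer, while the agent keeps choosing its next step and re-plans when something goes wrong.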

Now, it’s easy to see the business opportunity here. If AI can handle entire workflows within a business, then the big workforce replacement is truly coming! What techno-optimists (not the kind you meet in Berghain) dream of is something like this:

An AI agent that constantly runs in the background, proactively managing legal risk and streamlining workflows.

It monitors contracts and regulations, flagging potential compliance issues before they become problems. It automates routine tasks like drafting NDAs, reviewing vendor agreements, and tracking key deadlines. It keeps an eye on internal communications, alerting the legal team when an executive casually suggests something that might be non-compliant.

When a complex issue arises, the agent doesn’t just provide research - it synthesises case law, drafts a legal memo, and suggests a strategy, saving hours of work. It also remembers past cases and company policies, ensuring consistent decisions and built-in quality assurance.

Sounds promising (and maybe a little terrifying), but reality will look very different. Having worked in large corporations and law firms, I see plenty of obstacles to this futuristic vision, even though I agree that agents have the potential to be a really valuable tool.

Let’s go through 3 of them here:

1. Unwritten rules

As a lawyer, it’s counterintuitive to think that some rules aren’t written down. But in many cases, that might actually be for the best.

Just not for agents. To be useful, AI agents need clear, structured rules to operate within - something that’s notoriously difficult in large organizations where so much runs on unwritten norms and tacit knowledge.

Lawyers know this struggle all too well. Sure, knowing the law is expected, but real effectiveness comes from mastering the unspoken rules - the politics, the billable hour dance, the subtle (but crucial) decision to cc the right email to the right partner at the right time so it doesn’t disappear into the abyss.

And here’s the problem: these rules are hard to define, let alone codify. Make them too broad, and the agent might start interpreting tasks on its own, leading to spectacularly bad decisions. Make them too strict, and it becomes useless, stuck in a rigid box with no flexibility. Striking the right balance is tough - especially when the “rules” aren’t written down to begin with.

In the latest issue of the AI Policy Perspectives newsletter, Seb Krier, who works on the Public Policy team at Google DeepMind, describes this as “grey knowledge”:

“Employees in most commercial organisations hold critical “grey” or institutional knowledge. This includes insights gained from informal sources, like podcasts, or a nuanced understanding of workplace politics. For instance, knowing how to navigate internal politics at work or recognizing which tasks are worth prioritizing under shifting external circumstances (e.g. political changes) is rarely written down. You won’t find an internal wiki entry that says “Avoid asking this person about X because they’re biased against it” or “The new minister hates automated vehicles, so highlight healthcare topics at the next event.” In human-agent workflows, this kind of contextual knowledge and knowhow gives humans a certain advantage, and presents a significant challenge to overcome before organizations can transition to agent-only companies.”

He also points out that this kind of tacit knowledge can become a form of job security, with employees - consciously or not - withholding or manipulating information to maintain their value. And he’s right. No one has to actively sabotage an AI agent for it to struggle - sometimes, the mere fact that key insights aren’t explicitly documented is enough to keep automation at bay.

That said, he believes agents will eventually overcome this challenge:

“This challenge is solvable - agents could infer and learn quirks over time if management provides enough access to contextual data. For instance, an agent might eventually learn, ‘This is the quirk to remember when submitting a finance request.’ However, this process will be slow and uneven, especially for roles requiring physical or social interactions.”

And that’s the key takeaway: it’s not a question of if, but when. AI agents can adapt to unwritten rules, but learning institutional quirks takes time - and in many cases, longer than businesses are willing to wait.

2. The human bottleneck

This brings me to my next point: the ever-annoying “human in the loop.” Oh, if only AI could just handle everything - no approvals, no oversight, no fragile human egos slowing things down. Wouldn’t life be easier?

As Tyler Cowen has pointed out, as AI advances, the real constraint on productivity isn’t always the technology itself - sometimes it’s humans. I recommend listening to some of Tyler’s points in this episode of the Dwarkesh Podcast, where he also talks about why he writes books for AI to read(!?).

The point he’s making is that AI can analyze data, generate reports, and even make recommendations in seconds, but if those outputs require a human decision at every step, the speed advantage disappears. The AI moves at lightning speed; the human takes a week to “review and revert.” A system artificially bottlenecked by our limited attention spans, risk aversion, and deeply ingrained need to schedule follow-up meetings.

This is especially true in legal and corporate settings, where human approval processes aren’t just slow - they’re structurally necessary. Regulations demand human oversight. Liability concerns mean no one wants to be the person who let the AI sign off on a multimillion-dollar contract without review.

The question is how useful AI can be if it’s constantly limited by so-called human bottlenecks. The fact is that the more we keep AI on a short leash (even for good reasons), the less useful it becomes.

If every AI-generated contract, memo, or decision requires human approval, we don’t get true efficiency gains - we just create slightly more polished drafts for the same slow-moving process. At some point, businesses will have to decide how to handle this.

For low-risk, repetitive tasks, keeping humans in the loop might be more costly than beneficial, making automation a no-brainer. But for high-stakes decisions - where a mistake could mean regulatory penalties or major liability - companies may decide that slower, human-driven workflows are still the safer bet.
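To make that trade-off concrete, here is a minimal Python sketch of risk-based routing, where the agent finishes low-risk work alone and escalates the rest to a human. The `Task` fields and the `AUTO_APPROVE_LIMIT` threshold are hypothetical assumptions for illustration, not a recommended policy; in practice the cut-off would come from the legal team’s own risk appetite.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Task:
    name: str
    contract_value: float  # hypothetical: value at stake in the deal
    regulated: bool        # hypothetical: does it touch a regulated area?


# Hypothetical cut-off chosen for illustration only, not a recommendation.
AUTO_APPROVE_LIMIT = 10_000


def route(task: Task) -> str:
    """Decide whether the agent may finish a task alone or must wait for a human."""
    if task.regulated:
        return "human review"  # regulation demands that a person signs off
    if task.contract_value <= AUTO_APPROVE_LIMIT:
        return "auto"          # low-risk, repetitive: a human check costs more than it saves
    return "human review"      # high stakes: slower, but the safer bet


if __name__ == "__main__":
    tasks = [
        Task("standard NDA", 0, False),
        Task("data-processing agreement", 5_000, True),
        Task("acquisition agreement", 50_000_000, False),
    ]
    for t in tasks:
        print(f"{t.name} -> {route(t)}")
```

Moving `AUTO_APPROVE_LIMIT` up or down is exactly the business decision described above: every task pushed into "auto" removes a bottleneck, and every task left in "human review" keeps one in place on purpose.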

At some point, though, the cost of hesitation will outweigh the risk of automation. Businesses that move too cautiously will watch their more agile competitors reap the productivity gains first. The challenge isn’t just removing the human bottleneck - it’s knowing when it’s too expensive to keep it in place.

3. Infrastructure

Right now, plenty of companies are selling AI-powered workflow automation, promising seamless integration with Gmail, Slack, and other tools through their APIs. In my opinion, these companies face a big challenge, at least when it comes to enterprise infrastructure.

Most of these solutions take a shallow approach to enterprise infrastructure. Large organizations don’t just run on neatly packaged SaaS tools - they rely on a tangled mess of in-house software, cloud platforms (Azure, GCP), legacy databases, and, inevitably, that one critical document saved on some guy’s laptop.

This. Takes. Time.

There’s no quick fix. You can’t just bolt an agent onto a system and expect it to work - you need to rethink entire workflows. What needs to be automated? Where does the agent pull context from? How do you keep the dataflow up to date? How do different systems communicate? The setup process itself can be so time-consuming that it only makes sense if the long-term efficiency gains outweigh the upfront investment.

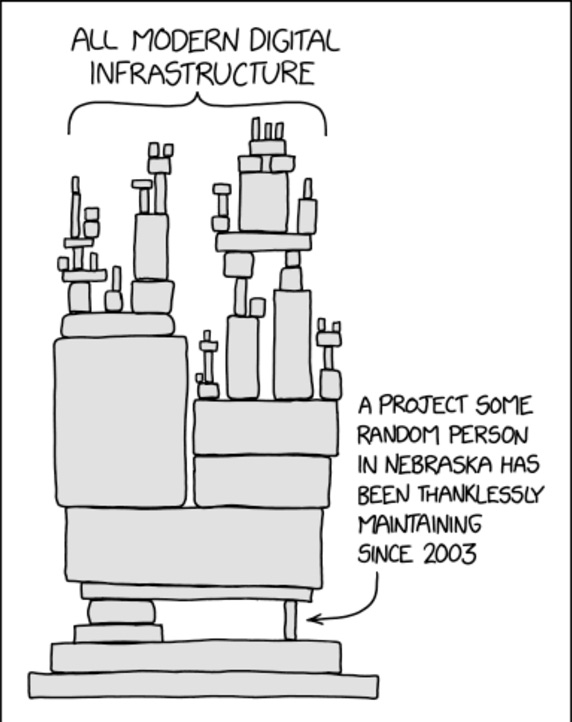

The Nebraska meme is old, but still holds water

But infrastructure is just one piece of the puzzle. AI agents don’t just assist; they observe and learn. A well-integrated legal agent, for example, wouldn’t just respond to prompts - it would track case law updates, anticipate relevant filings, and draft memos in a lawyer’s preferred style. Over time, it would become an extension of their thinking, catching mistakes before they happen and automating repetitive legal work. Again, the input data would have to be consistent and kept up to date for the agent to produce the right output.

I do think there’s a real opportunity here. A startup that targets a single, well-defined process - one that’s common across many companies - and builds a scalable agent workflow could carve out a strong niche. The key would be standardization: using the same APIs, tapping into the same legal sources, and integrating seamlessly with existing enterprise software. The result wouldn’t be a fully autonomous AI employee, but a highly effective, limited-scope agent that actually works.

Of course, this would still require significant effort - both in development and in navigating enterprise adoption. And pricing? That’s another challenge entirely. But if AI agents are going to move beyond flashy demos and into real business impact, this kind of focused, infrastructure-aware development is where progress is likely to happen first.

Where does that leave us?

As someone who has worked on AI adoption in the legal sector for many years, I can say with a high degree of certainty that the current predictions are right, but the timeline is wrong.

Most companies will eventually figure out how to standardize workflows, optimize data flows, and strategically remove human roadblocks, and those that do will unlock serious productivity gains. But it will take longer than predicted because of the three reasons above (and several others, tbh). And the first big winners probably won’t be legacy corporations trying to retrofit agents into outdated systems, but leaner, AI-first startups that build around automation from day one - probably around a single process.

So, while the big workforce replacement isn’t happening overnight, the foundations are being laid. And if history is any guide, by the time most organizations realize what’s coming, it’ll already be here.

In other news…

California wants AI chatbot safeguards

California’s SB 243 would require chatbot developers to prevent “addictive engagement,” remind kids that AI isn’t human, warn parents about risks, and report on chatbot use linked to suicidal ideation. The bill follows lawsuits alleging AI chatbots contributed to teen suicides.

AI-Powered Reports for $200/Month

OpenAI’s new Deep Research tool generates research reports by analyzing hundreds of sources. It’s not real-time chat - reports take 5–30 minutes to compile, with citations.

While some praise its potential, critics warn of hallucinations, misinformation risks, and academic spam. Also missing from OpenAI’s promo? The energy cost - each report likely consumes far more power than a typical ChatGPT query.

Italy Bans DeepSeek

Another “Deep” news story. Italy has banned DeepSeek, the Chinese AI startup behind the R1 reasoning model, citing privacy and security concerns. Given Italy’s earlier ChatGPT ban, this isn’t shocking. Expect more fragmented AI regulation across Europe, making compliance a growing headache.

Start-up of the week

Here’s an interesting example of an AI agent in action: Zania AI, a compliance-focused tool that monitors regulations, automates audits, and flags risks before they escalate. Sounds great, but for me the big question is whether it’s too broadly defined to work effectively in complex, high-stakes environments. We’ll soon find out, and I’m excited to see what they come up with.

Extra toppings

With any luck, AI agents will finally kill off the never-ending parade of SaaS dashboards built solely to make manual tasks slightly less painful.

So “leave the law and start building something” would be my advice to everyone in the legal sector nowadays.