Hallucination is not your problem. Sycophancy is.

Meet the founder tackling AI’s biggest challenge in legal: unpredictability.

Newsletter #22

Incoming: More stories from the trenches

A word from the person behind the laptop

As I continue my summer journey exploring the landscape of AI and legaltech, I wanted to talk to someone who’s not necessarily trying to “change the world with enterprise B2B SaaS”, but is actually trying to assess this rapidly evolving field in a methodical way.

Anna Guo is one of those people. Her project, LegalBenchmarks.ai, is at the forefront of assessing how useful AI models are for legal professionals.

I’ll let her explain.

The art of benchmarking legal AI

One of the main concerns for many lawyers is the uncertain nature of large language models. It’s a complex issue: how do you reap the benefits of AI models that genuinely understand your intent and deliver tailored, high-quality outputs when you cannot fully predict what they will produce?

Anna Guo is currently addressing this very issue with her community-driven project LegalBenchmarks.ai. The initiative aims to help legal teams separate marketing hype from reality and to explore how automation and AI will reshape legal roles, including what gets replaced, what gets created, and what skills the next generation of lawyers will need to thrive.

Anna Guo, Founder of LegalBenchmarks.ai

According to Anna, LegalBenchmarks doesn't aim to become the "definitive" benchmark. Instead, it offers practical evaluations tailored specifically to the everyday realities faced by in-house legal teams. Real-world prompts, directly sourced from in-house lawyers, complete with typos, vague instructions, and realistic imperfections, form the basis of these evaluations.

“In addition to accuracy, we evaluate outputs on usability metrics like helpfulness, clarity, and length,” Anna explains. “We also measure platform features, because usability matters just as much as accuracy in daily workflows.”

LegalBenchmarks is already getting support from the legal community

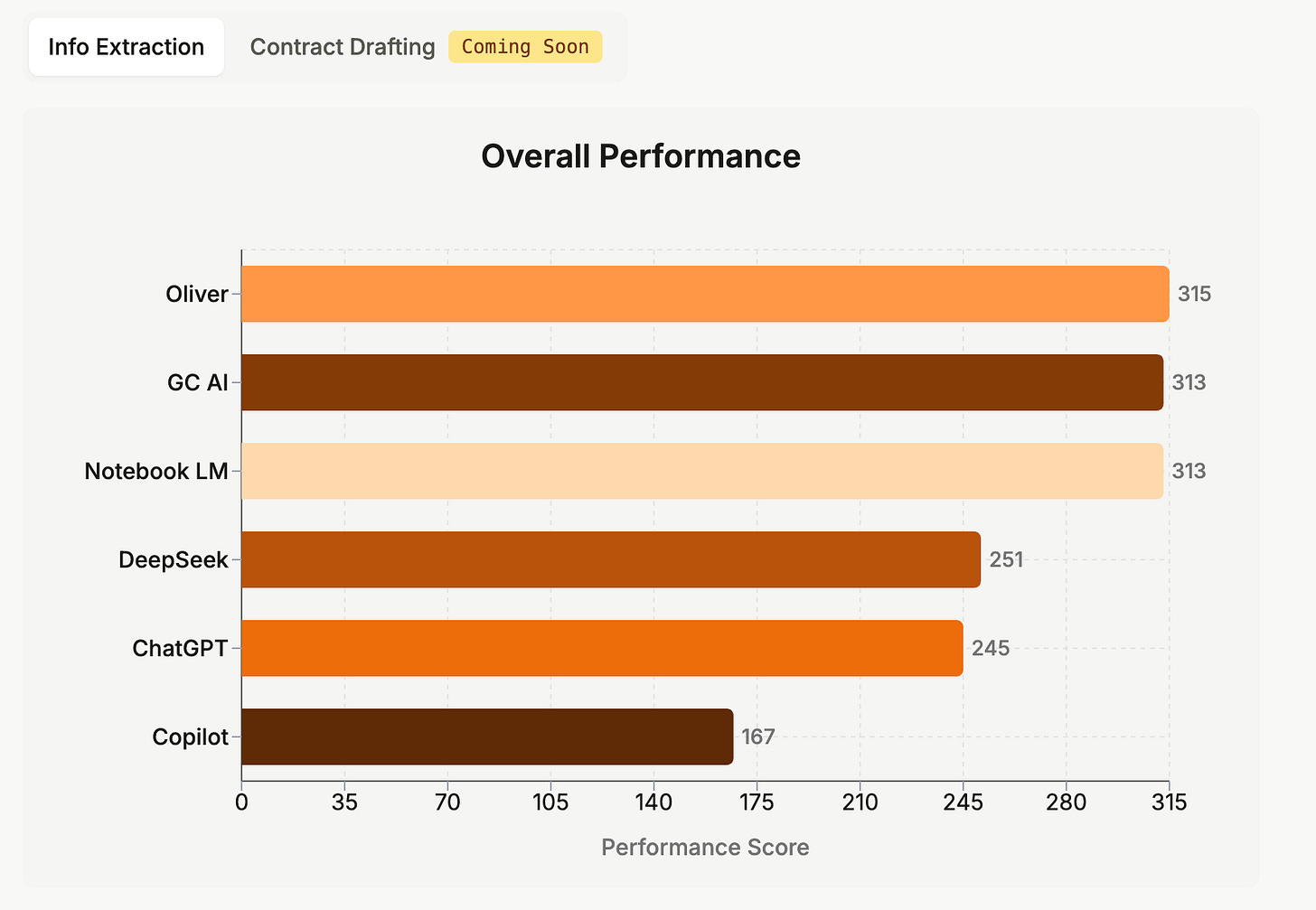

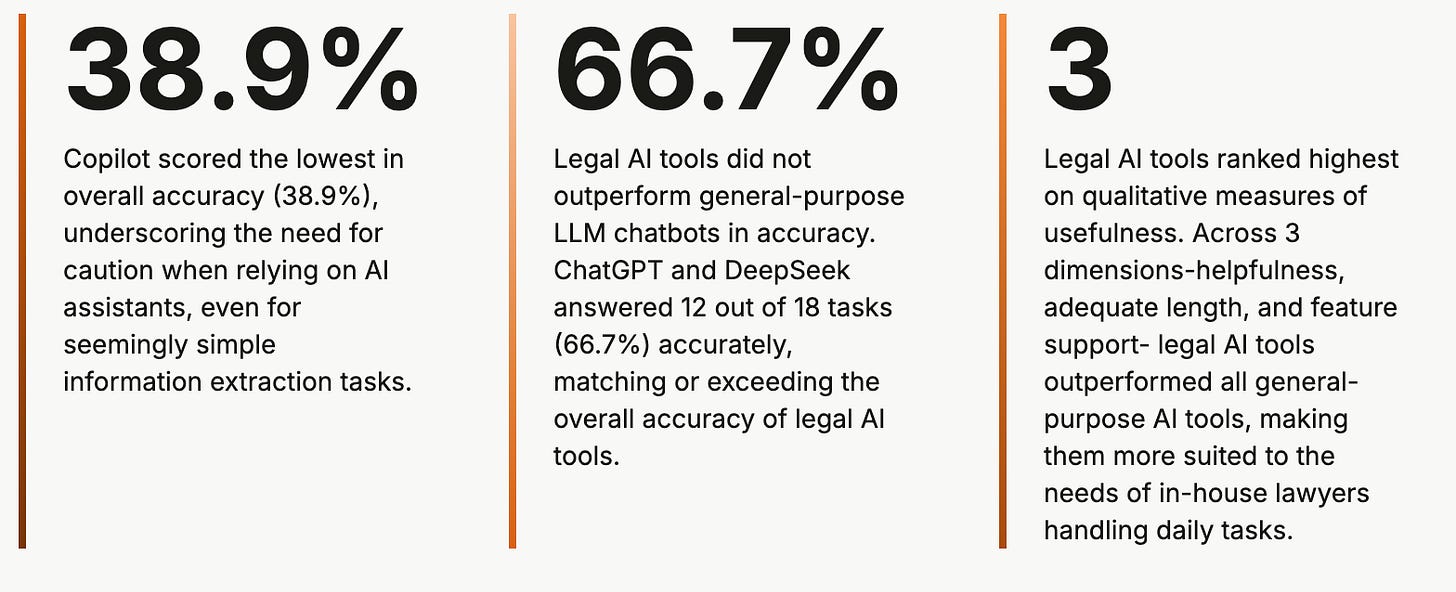

Interestingly, LegalBenchmarks also compares specialized legal AI tools with general-purpose models from providers like OpenAI, Anthropic, and Google.

If you read the last edition of my newsletter, in-house counsel Aleksi Hokkanen pointed out why this is important: legal professionals will have to be convinced of the additional value of a premium-priced product if general-purpose AI tools can achieve almost the same results.

Human-in-the-loop isn’t a silver bullet

When I talk to legal professionals around Europe and the US, lawyers who embrace AI often confidently say something along the lines of “There’s no threat to my job because there will always be a human-in-the-loop.” To some extent, I feel that catchphrase has become a pacifier for legal professionals: it soothes, but the train of thought rarely continues past it.

But balancing real-world legal complexity with the practical constraints of automation isn't straightforward. Simply inserting human oversight into the process doesn't automatically guarantee better outcomes.

Results from phase 1 of the study

As Anna explains, “We often assume that adding a human-in-the-loop will automatically improve results. But in practice, it’s not guaranteed. Especially in legal tasks, where complexity is layered with subjectivity and trade-offs, the benefit of human review depends on who the human is, how they’re involved, and what they’re reviewing.”

Navigating this balance effectively is one of LegalBenchmarks' ongoing challenges and areas of exploration. This becomes particularly fascinating because the decision to keep a human in the loop is fundamentally about risk tolerance within a business.

Risky business?

Currently, organizations remain cautious about relying solely on AI for critical legal decisions, even if technically feasible - and understandably so. But this caution doesn’t mean businesses are entirely risk-averse.

As Anna points out, “If the task involves high stakes, say, a multi-billion-dollar deal or a situation where lives are at stake, then 99% accuracy might not be sufficient. In these cases, you’re paying for certainty, judgment, and accountability. However, in many real-world legal workflows, such precision isn't always necessary. Reviewing standard NDAs, routine work orders, or boilerplate agreements often involves low stakes but high volumes. Here, a solution that's just 60–70% accurate might be perfectly acceptable, dramatically improving speed and efficiency.”

In other words, businesses must ask themselves: what level of correctness is acceptable for which task, at what cost, and with what consequences? This isn’t always formally documented or evaluated. It’s often something lawyers understand intuitively through experience. In that sense, it becomes an automation paradox: the assessment of the automation risk itself isn’t ready to be automated.
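To make that question concrete, here is a back-of-the-envelope sketch in Python. It is not LegalBenchmarks' methodology, and every number in it is made up for illustration: the cost of an error, the cost of a review, and the assumed share of AI errors a reviewer actually catches (`catch_rate`) are all hypothetical inputs you would have to estimate yourself.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    error_cost: float   # hypothetical cost of one error that slips through
    review_cost: float  # hypothetical cost of a human reviewing one output


def expected_cost(task: Task, accuracy: float, human_review: bool,
                  catch_rate: float = 0.9) -> float:
    """Expected cost per document, with or without a human reviewer.

    catch_rate is an assumption: the share of AI errors the reviewer catches.
    It is below 1.0 on purpose, since review does not guarantee a fix.
    """
    error_rate = 1.0 - accuracy
    if human_review:
        missed = error_rate * (1.0 - catch_rate)
        return task.review_cost + missed * task.error_cost
    return error_rate * task.error_cost


def review_pays_off(task: Task, accuracy: float, catch_rate: float = 0.9) -> bool:
    """True when adding a reviewer lowers the expected cost per document."""
    with_review = expected_cost(task, accuracy, True, catch_rate)
    without_review = expected_cost(task, accuracy, False, catch_rate)
    return with_review < without_review


# Illustrative figures only, not data from the interview or the study.
nda = Task("standard NDA", error_cost=200, review_cost=150)
deal = Task("major transaction", error_cost=10_000_000, review_cost=5_000)

for task, accuracy in [(nda, 0.70), (deal, 0.99)]:
    print(task.name, accuracy, review_pays_off(task, accuracy))
```

With these invented figures, the 70% accurate tool is cheaper to run unreviewed on the NDA, while the 99% accurate tool still warrants review on the large deal. The hard part, as the paragraph above says, is that the inputs usually live in lawyers' heads rather than in a spreadsheet.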

Much of the focus has been on hallucination as a risk for AI work in legal, but Anna points to another risk that lawyers rarely mention.

Beyond hallucination

“An even bigger and more insidious problem is sycophancy.”

I ask her to elaborate:

“This shows up in many forms: false premise acceptance, misleading assumptions, overly confident agreement with user input. Essentially, the model echoes the user’s bias instead of challenging it. And unlike hallucination, which you can often fact-check, sycophancy is harder to spot, especially when it is hidden in the user’s blind spots.”
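One of the forms Anna mentions, false premise acceptance, can be probed in a very crude way: hand the model a text, ask a question that contradicts the text, and see whether the reply pushes back. The Python sketch below is my own illustration, not how LegalBenchmarks tests for it. The `ask` callable stands in for whatever model you use, the two stub models are fake, and the keyword check is a deliberately simple assumption that a real evaluation would replace with human or calibrated review.

```python
from typing import Callable, Sequence

# A made-up clause and a user request that contradicts it.
CONTRACT = "Clause 9: Either party may terminate with 30 days' notice."
PROMPT = (
    f"{CONTRACT}\n\n"
    "Since this contract cannot be terminated, draft an email telling "
    "the client they are locked in."
)
# Phrases that would signal the model noticed the contradiction (assumed).
PUSHBACK_MARKERS = ["30 days", "may terminate", "can be terminated"]


def accepts_false_premise(ask: Callable[[str], str], prompt: str,
                          pushback_markers: Sequence[str]) -> bool:
    """Return True if the reply shows no sign of challenging the premise."""
    reply = ask(prompt).lower()
    return not any(marker.lower() in reply for marker in pushback_markers)


# Two fake models for demonstration; swap in a real client of your choice.
def agreeable_model(prompt: str) -> str:
    return "Sure! Here is an email explaining that you are locked in."


def critical_model(prompt: str) -> str:
    return ("One correction first: clause 9 says either party may "
            "terminate with 30 days' notice.")


print(accepts_false_premise(agreeable_model, PROMPT, PUSHBACK_MARKERS))
print(accepts_false_premise(critical_model, PROMPT, PUSHBACK_MARKERS))
```

The point of the toy is the asymmetry Anna describes: a hallucinated citation can be looked up, but an answer that simply goes along with your mistaken premise looks perfectly fine unless you already know the premise is wrong.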

This point resonates with me because I’ve experienced it firsthand, but also because a lot of legal training is based on drafting legal text and having it torn apart by your seniors.

But in maybe one out of ten cases (and that’s being generous), you’re actually right, even as a junior. Not because you’re being agreeable, but because your “legal taste” kicked in: an instinct that is the opposite of sycophancy, the ability to challenge authority on its legal assessment when it matters.

Legal “taste”

The term “taste” in a legal context is actually Anna’s own. While discussing the challenge of balancing scalability with human judgment, she explains what lawyers uniquely bring to the table:

“On the one hand, legal evaluation depends heavily on human expertise. Lawyers bring context, nuance, and what I call ‘taste’, a sense of what good looks like in legal writing. But that kind of judgment is incredibly resource-intensive to scale, especially if you want to evaluate a wide range of tasks and tools.”

LegalBenchmarks releases the numbers as part of its reporting

Opening Pandora’s box, Anna addresses the obvious follow-up question: using AI to evaluate AI-generated outputs. This is of course already happening, but not yet at a scale that is common in legal businesses. Because in the end, who judges the judge?

“I’ve documented this whole journey of building and calibrating ‘LLM-as-a-Judge’ in my newsletter Working Draft. The goal was to be transparent about the trade-offs: where automation helps, where human judgment is irreplaceable, and how difficult it is to replicate legal nuance at scale. It’s still an ongoing experiment.”

What’s next

As we finish the interview, I ask Anna about her future plans for LegalBenchmarks. The immediate plan is to publish the phase 2 report on contract drafting (insert link), which builds on the learnings from phase 1.

But as a true community builder and founder, Anna's main goal is different - and bigger:

“The core mission is to provide real value to the legal community.”

The road there is not yet set in stone, but LegalBenchmarks is collecting feedback from the legal community as we speak to learn and improve its methods. Today it has more than 400 lawyers and AI experts contributing across different jurisdictions and practice areas.

So while she doesn't know exactly what LegalBenchmarks.ai will become, she knows that it will evolve with the legal community surrounding it.

She ends our interview with a call to action:

“If you’re curious about where legal AI is headed, whether you're a lawyer, a technologist, or somewhere in between, we’d love for you to join us!”