Newsletter #15

An introduction to German geography? Keep on reading

A word from the person behind the laptop

I can’t help but wonder if the Brothers Grimm, with their wild imagination, ever envisioned that the dark forests and twisted tales they conjured would one day inspire the name of a generative AI company.

Is this image AI-generated? Yes/no?

An AI company, mind you, that’s now cranking out unhinged images of copyrighted cartoon characters wielding semi-automatic rifles and promoting drug and alcohol abuse - all backed by a billionaire who dabbles in spaceship construction… Probably not.

Anyway, that’s the theme of the newsletter this week.

Enjoy.

In The Hall of the Mountain King

I have a friend who once told me that after a big night out, he’d always play “In the Hall of the Mountain King” on his ride home, speeding up on his bike to amp up the drama. It’s like creating your own personal epic, where your drunken legs propel you through the city, making you the narrator of a late-night fairy tale come to life.

Using the Stable Diffusion model from Black Forest Labs gives me that same vibe. No wonder the dramatic Schwarzwald in Southern Germany has inspired countless fairy tales, and it should be no surprise that a GenAI company would tap into that magic when naming their operation. Their latest Stable Diffusion model creates results that make the images your boomer friends and family post on Facebook look like the sketch of a 4-year-old. So clearly there’s a new AI powerhouse in play here.

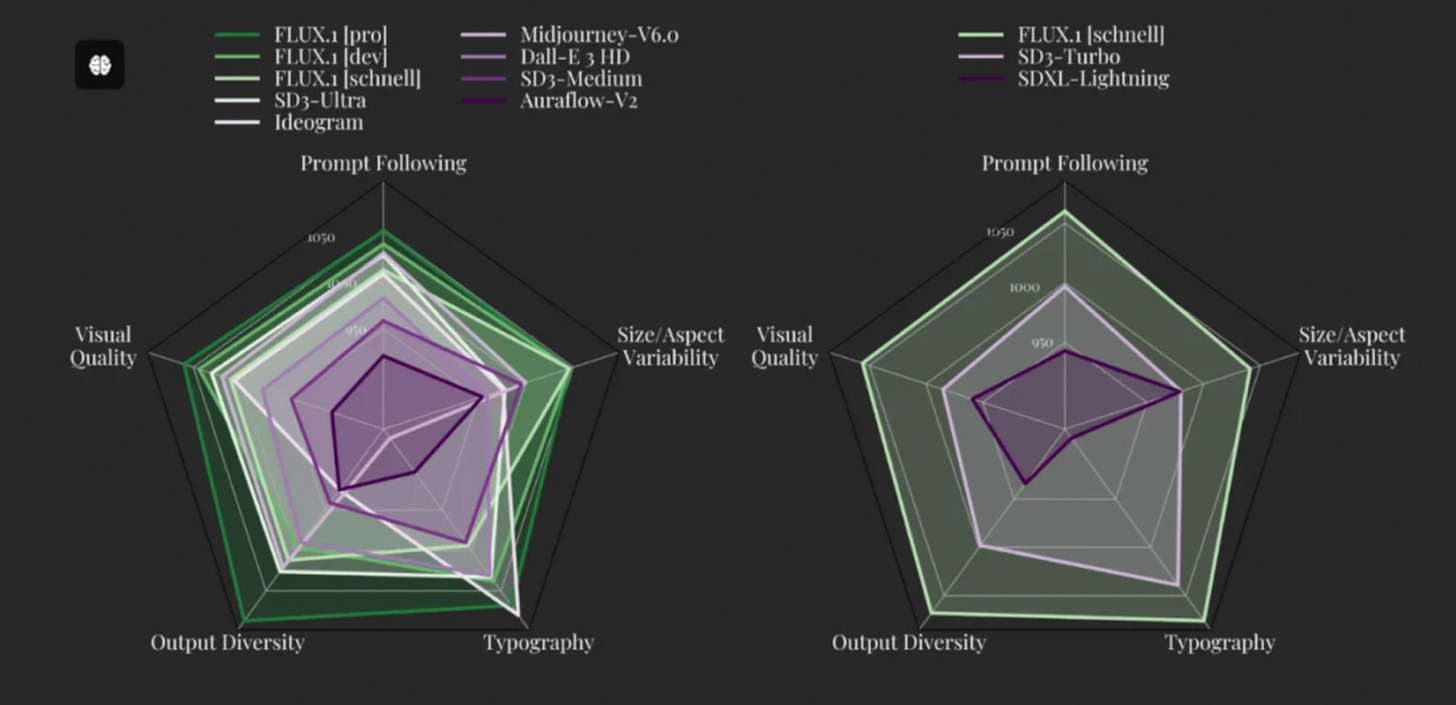

The test results from BFL’s latest Stable Diffusion model look better than those of the other players in the market

But Black Forest Labs isn’t a new player in the AI world. They’re led by Robin Rombach, Patrick Esser, and Andreas Blattmann - the original brains behind the Stable Diffusion models that flipped open-source image generation on its head. Their work on latent diffusion models laid the groundwork for Stable Diffusion and influenced key design elements in heavy hitters like DALL-E 2, DALL-E 3, and Sora - and they just raised a Seed round of $31 million from Andreessen Horowitz.

So, why am I rambling about the Schwarzwald, Stable Diffusion models, and my friend’s drunken bike rides home? Because this has the potential to crank up the legal drama in the AI image generation game by more than just a notch.

Elon entered the chat

First things first: How did Black Forest Labs all of a sudden get such massive attention? Well, their Stable Diffusion model is now powering the image generation engine behind Twitter 2.0 (also known as X). The AI model, named Grok (don’t ask), is Twitter’s take on the many LLMs out there, but now they’re dabbling in image generation too. And to pull this off, they teamed up with Black Forest Labs.

Grok is reportedly modeled after Douglas Adams' humorous science fiction novel The Hitchhiker's Guide to the Galaxy.

What’s worth noting here is that this model has far fewer safeguards compared to other Stable Diffusion models on the market. This lack of guardrails has unleashed a wave of wild and downright disturbing images - everything from school shootings to blatant copyright infringements. OSINT Analyst Oliver Alexander has been going through these questionable outputs on Twitter, and trust me, they’re NSFW.

As he rightly points out, this is an open-source tool that anyone can use however they like.

But there’s a big difference between an individual generating images and a company making the technology available to millions of users worldwide. Just look at what happened when Google released Gemini’s image generation feature.

So what’s the legal drama?

Let’s break down the legal drama. First off, the question everyone’s asking: Can you really put such powerful image generation technology in the hands of a bunch of Twitter’s crypto bros? Seems like a Monkey JPEG disaster waiting to happen.

This is a free Monkey JPEG. Sell it and make a profit.

But jokes aside, the real legal mess here is twofold: IP/copyright and safeguards. They’re really two sides of the same coin, as proper safeguards can help prevent IP and copyright infringements in the first place, but they’re often discussed separately because the IP issues are already being fought out in court.

As you already know, AI needs a ton of training data to produce high-quality results, and a lot of that data is copyrighted. This raises big questions about the legality of collecting and using this data and what happens when these AI outputs start looking a lot like (or straight up copy) someone else’s work. Grok is already spitting out images that have put the lawyers of Disney, Warner Bros, and Nintendo into a hyperloop (pun intended) of infringement cases.

The IP and copyright issues are obvious, and the landscape is changing. Just to repeat myself from an earlier version of this newsletter:

“Notable examples include ‘Authors Guild v. Google’ and ‘Perfect 10, Inc. v. Amazon.com, Inc.’, where the outcomes were surprisingly favourable to the tech giants. Some legal experts argue that these cases could have easily resulted in less favourable outcomes, impacting the trajectory of digital copyright law significantly. For instance, in ‘Authors Guild v. Google’, the court ruled in favour of Google’s book-scanning project, determining that it qualified as fair use.”

This also means that the approach of ex-Google CEO Eric Schmidt, who says successful AI startups can steal IP and hire lawyers to “clean up the mess”, might not work as well as it used to. Let’s not forget that this is a guy who says you still need to show up at the office to be productive even though research shows the opposite. Strong ’00s vibe.

The Verge covered the story, including the Eric Schmidt quotes that have since been taken down.

In my opinion what’s interesting here is the collision between the so-called "ultimate free speech platform" and the AI safeguards.

This same conflict plays out across many areas of society, which is why the absolutist approach might not work out as nicely as it sounds. Twitter 2.0 will have to limit content on the platform and the Grok output, which flies directly in the face of the worldview Musk keeps promoting. Not that there’s anything wrong with promoting free speech on social media platforms btw, but the odds are 100% that you’ll end up sounding like a hypocrite when you remove/prioritise content based on your own beliefs.

As Jacob Mchangama, CEO of the Future of Free Speech Project at Vanderbilt University, pointed out when Elon bought Twitter in 2022:

“In short, there is a compelling case to be made for why free speech should be strengthened, not weakened on social media. But the skeptics are unlikely to be persuaded by a conception of free speech based on partisan grievances and trolling. Instead, Musk should focus on demonstrating how the benefits of a more robust commitment to free speech on Twitter will outweigh the harms.”

If Grok turns into a partisan AI mouthpiece pushing Elon’s worldview, we’re not just stuck where we were before - we’re actually worse off. Now, that partisan view is AI-fueled, raising the stakes and complicating the landscape even further.

The real question is whether Twitter and Black Forest Labs can craft a Stable Diffusion model that both respects the legal framework and still champions free speech. My guess? Probably not.

In other news…

Finally some law enforcement on deepfakes

San Francisco City Attorney David Chiu has filed a lawsuit against the owners of 16 top nonconsensual deepfake porn sites on behalf of the People of California. The suit claims these companies violated state and federal laws against deepfake pornography.

New privacy regulation making (brain) waves

Colorado just made history as the first U.S. state to pass a privacy act that includes neural data under the category of “sensitive data” protected by law. This move sets a new precedent in data privacy, recognizing the importance of safeguarding the most personal and intimate data - our thoughts.

Start-up of the week

No real website yet, but YC-backed startup Promptarmor is already making waves by doing something refreshingly simple: explaining how their solution works. While most startups drown you in buzzwords and vague promises, Promptarmor offers a clear breakdown of their approach to safeguarding AI. No one currently knows what the need for a solution like this will be, but my guess is that they’re on to something.

Extra toppings

Talking of copyright infringements…